This blog post isn’t really about anything specific. I recently stumbled onto a backup drive with a folder of Adium logs from a MacBook Pro I used around 2011-2012. I had a long weekend, so I thought I’d spend it importing those logs into Pidgin (which is what I currently use) and just blog about it.

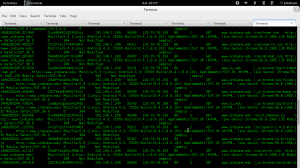

First I had to do some analysis to see how the two log formats differ.

The first step is to gather all the XML files and copy them into directories with the same names.

Start by creating a folder for each email account (each folder is named after the email address alone):

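```console
$ find [$PATH]/ | grep -o -P "([$PATH]\/[\w\W]+?@[\w\W]+?\/)+?" | sed 's/[$PATH]\///1' > account_folders
```

Here [$PATH] is a placeholder for the directory that holds the Adium logs, not the shell’s $PATH variable. Remember to escape every forward slash with a backslash in the grep and sed patterns. For example, if the directory is /home/yourName, the command becomes:

```console
$ find /home/yourName/ | grep -o -P "(\/home\/yourName\/[\w\W]+?@[\w\W]+?\/)+?" | sed 's/\/home\/yourName\///1' > account_folders
```

Then sort the list and create one folder per unique account:

```console
$ sort account_folders > sorted_account_folders
$ uniq sorted_account_folders | xargs -I{} mkdir {}
```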
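The same folder extraction can be sketched in Python for comparison. It rests on one assumption: the account folder is the first path component below the log directory that contains an @. The function name account_folders and the sample paths are hypothetical.

```python
from pathlib import PurePosixPath


def account_folders(paths, root):
    """Return the sorted, unique first components under root that contain '@'."""
    root = PurePosixPath(root)
    folders = set()
    for p in paths:
        rel = PurePosixPath(p).relative_to(root)
        for part in rel.parts:
            if "@" in part:
                folders.add(part)
                break  # only the first component with an @ is the account folder
    return sorted(folders)


# Hypothetical sample paths
paths = [
    "/home/yourName/logs/GTalk.me@example.com/friend@example.com/a.xml",
    "/home/yourName/logs/GTalk.me@example.com/other@example.com/b.xml",
    "/home/yourName/logs/GTalk.work@example.edu/boss@example.edu/c.xml",
]
print(account_folders(paths, "/home/yourName/logs"))
# ['GTalk.me@example.com', 'GTalk.work@example.edu']
```

Each returned name could then be created with Path(name).mkdir(exist_ok=True), which does not fail if the folder already exists.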

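Next, copy all the XML files to their new locations. First list them. Then work out each file’s destination folder. Finally, pair every file with its destination in a file of cp commands:

```console
$ find [$PATH] | grep '\.xml$' > all_xml_files
$ grep -o -P "([$PATH]\/GTalk.[EMAIL_ADDRESS]\/[\w\W]+?@[\w\W]+?\/)+?" all_xml_files | sed 's/[$PATH]\///1' > all_folders_destination
$ awk 'NR==FNR{a[FNR""]=$0;next;}{print "cp","\""a[FNR""]"\"",$0}' all_xml_files all_folders_destination > command
```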
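The awk one-liner pairs line N of all_xml_files with line N of all_folders_destination and turns each pair into a cp command. Here is a Python sketch of the same pairing. It uses shlex.quote, so names with spaces and parentheses (which every Adium log name has) are quoted safely. The function name cp_commands and the sample paths are hypothetical.

```python
import shlex


def cp_commands(sources, destinations):
    """Pair the i-th source with the i-th destination and build one cp command per pair."""
    if len(sources) != len(destinations):
        raise ValueError("source and destination lists have different lengths")
    return [
        "cp " + shlex.quote(src) + " " + shlex.quote(dst)
        for src, dst in zip(sources, destinations)
    ]


# Hypothetical sample data
sources = ["/logs/GTalk.me@example.com/friend@example.com/friend@example.com (2011-09-14T15.11.42-0400).xml"]
destinations = ["GTalk.me@example.com/friend@example.com/"]
for cmd in cp_commands(sources, destinations):
    print(cmd)
```

Unlike the awk version, this one refuses to build anything when the two lists differ in length, instead of silently pairing the wrong lines.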

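Let’s do a quick test by running only the first generated command:

```console
$ head -n 1 command | bash
```

If that works, run all of them and then check that we have all the XML files from the original:

```console
$ bash command
```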

```console
$ find . | grep '\.xml$' | wc -l
445
$ find [$PATH]/ | grep '\.xml$' | wc -l
445
```

The destination directory has the same number of XML files as the source directory. Good.
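The same sanity check can be sketched in Python with pathlib. The helper name count_xml is hypothetical. Like the find | grep | wc -l pipelines, it counts every file ending in .xml below a directory, including hidden ._ files, because pathlib’s glob does not skip names that start with a dot.

```python
from pathlib import Path


def count_xml(directory):
    """Count regular files whose names end in .xml anywhere below directory."""
    return sum(1 for p in Path(directory).rglob("*.xml") if p.is_file())


# Usage (placeholder path for the Adium log directory):
# print(count_xml("/path/to/adium/logs") == count_xml("."))
```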

Now let’s change the XML file names to match the ones Pidgin uses.

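We want to replace this format:

username@domain (YYYY-MM-DDThh.mm.ss-GMT-DIFFERENCE).xml

with this (the final name also gets the time zone abbreviation, e.g. EDT, just before .txt; that part is added further down):

YYYY-MM-DD.hhmmss-GMT-DIFFERENCE.txt

For example:

test@test.edu (2011-09-14T15.11.42-0400).xml

becomes

2011-09-14.151142-0400EDT.txt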
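The timestamp part of the conversion can be sketched as a single regular expression in Python. It produces the date, time and offset; the time zone abbreviation and .txt are appended later. The names ADIUM_STAMP and pidgin_stamp are hypothetical. The pattern assumes Adium’s "(YYYY-MM-DDThh.mm.ss±HHMM).xml" suffix exactly as shown in the example.

```python
import re

# Timestamp at the end of an Adium log name, e.g. "(2011-09-14T15.11.42-0400).xml"
ADIUM_STAMP = re.compile(
    r"\((\d{4}-\d{2}-\d{2})T(\d{2})\.(\d{2})\.(\d{2})([-+]\d{4})\)\.xml$"
)


def pidgin_stamp(adium_name):
    """Return 'YYYY-MM-DD.hhmmss-HHMM' for 'user@domain (YYYY-MM-DDThh.mm.ss-HHMM).xml'."""
    m = ADIUM_STAMP.search(adium_name)
    if m is None:
        raise ValueError("not an Adium log name: " + adium_name)
    date, hh, mm, ss, offset = m.groups()
    return f"{date}.{hh}{mm}{ss}{offset}"


print(pidgin_stamp("test@test.edu (2011-09-14T15.11.42-0400).xml"))
# 2011-09-14.151142-0400
```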

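First, strip everything except the timestamp (date, time and time zone offset), which means dropping the username and the parentheses. Before that, filter out the files whose names start with ._ (macOS metadata files that sit next to the real logs on the backup drive):

```console
$ grep -v '/\._' all_xml_files > all_xml_files_2
```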
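In Python, the same filter is a check on the last path component only. The helper name is_real_log and the sample paths are hypothetical.

```python
from pathlib import PurePosixPath


def is_real_log(path):
    """True for .xml logs, False for macOS '._' metadata files."""
    name = PurePosixPath(path).name
    return name.endswith(".xml") and not name.startswith("._")


# Hypothetical sample paths
paths = [
    "GTalk.me@example.com/friend@example.com/friend@example.com (2011-09-14T15.11.42-0400).xml",
    "GTalk.me@example.com/friend@example.com/._friend@example.com (2011-09-14T15.11.42-0400).xml",
]
print([p for p in paths if is_real_log(p)])
```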

Then keep only the timestamp part of each name and remove the parentheses:

```console
$ grep -o -P "\(\d\d\d\d-\d\d-\d\dT\d\d\.\d\d\.\d\d-\d{4}\)\.xml$" all_xml_files_2 > only_names
$ sed -r 's/\(//' only_names > only_names_
$ sed -r 's/\)//' only_names_ > only_names__
```

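Replace the T with a dot and drop the dots inside the time, so that hh.mm.ss becomes hhmmss:

```console
$ sed -r 's/T([0-9]{2})\.([0-9]{2})\.([0-9]{2})/.\1\2\3/' only_names__ > only_names___
```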

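Strip the .xml extension (the .txt extension is added back once the time zone is in place):

```console
$ sed -r 's/\.xml//' only_names___ > only_names____
```

Now you have the timestamp part of the file name in the format Pidgin uses, so move it back to only_names:

```console
$ mv only_names____ only_names
```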

Next, add the time zone to the file name. Since I only used this laptop in one location (Indiana), all I need to worry about is EDT (-0400) and EST (-0500). Pull the offset off the end of each name, map it to EDT or EST, and append it together with the .txt extension:

```console
$ grep -o -P '\d{4}$' only_names | awk '$1 == "0400" {print "EDT"} $1 == "0500" {print "EST"}' > timezones
$ awk 'FNR==NR{a[FNR""]=$0;next}{print a[FNR""]$0".txt"}' only_names timezones > true_filename
```
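The two awk steps map the offset at the end of each name to EDT or EST, then join the name, the zone and .txt. Here is a Python sketch of the same mapping. Like the post, it assumes the only offsets are -0400 and -0500; ZONES and with_zone are hypothetical names. An unknown offset raises a KeyError. The awk version would instead print nothing for that line, which shifts every later pair out of step.

```python
# Assumption: all logs were written in Indiana, so only these offsets occur
ZONES = {"-0400": "EDT", "-0500": "EST"}


def with_zone(stamp):
    """'2011-09-14.151142-0400' -> '2011-09-14.151142-0400EDT.txt'."""
    offset = stamp[-5:]  # the trailing sign and four digits
    return stamp + ZONES[offset] + ".txt"


print(with_zone("2011-09-14.151142-0400"))
# 2011-09-14.151142-0400EDT.txt
```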

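Here all_folders holds the destination folder of each file in all_xml_files_2, one per line. It can be built the same way as all_folders_destination, but from the filtered list, so that it lines up with true_filename. Then put each folder in front of its new file name:

```console
$ grep -o -P "([$PATH]\/GTalk.[EMAIL_ADDRESS]\/[\w\W]+?@[\w\W]+?\/)+?" all_xml_files_2 | sed 's/[$PATH]\///1' > all_folders
$ awk 'FNR==NR{a[FNR""]=$0;next}{print a[FNR""]$1}' all_folders true_filename > destination_files
```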

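Also make sure you sort both lists, so that each source file and its destination end up on the same line number; otherwise the pairs will not match:

```console
$ sort destination_files > sorted_destination_files
$ sort all_xml_files_2 > sorted_xml_files
```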

Both lists should have the same number of lines:

```console
$ wc -l sorted_destination_files
375
$ wc -l all_xml_files_2
375
```
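The sort and count check can be folded into one step. This sketch sorts both lists, stops if their lengths differ, and then pairs them. It relies on the same assumption as the post: after sorting, each source file and its destination sit at the same position. The name pair_sorted and the sample paths are hypothetical.

```python
def pair_sorted(sources, destinations):
    """Sort both lists and pair them position by position; fail loudly on a length mismatch."""
    if len(sources) != len(destinations):
        raise ValueError(f"{len(sources)} sources but {len(destinations)} destinations")
    return list(zip(sorted(sources), sorted(destinations)))


# Hypothetical sample data, deliberately out of order
pairs = pair_sorted(
    ["b/friend (2011-09-15T10.00.00-0400).xml", "b/friend (2011-09-14T15.11.42-0400).xml"],
    ["b/2011-09-15.100000-0400EDT.txt", "b/2011-09-14.151142-0400EDT.txt"],
)
for src, dst in pairs:
    print(src, "->", dst)
```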

Then generate the copy commands and run them:

```console
$ awk 'FNR==NR{a[FNR""]=$0;next}{print "cp","\""a[FNR""]"\"",$1}' sorted_xml_files sorted_destination_files > move_command
$ bash move_command
```

Finally, delete all the copied .xml files from the destination directory:

```console
$ find . | grep '\.xml$' | xargs -I{} rm {}
```
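Here is the same clean-up as a Python sketch. Path.rglob finds every .xml file below a directory, and unlink deletes it. Nothing goes through the shell, so the spaces and parentheses in the file names need no quoting. The name remove_xml is hypothetical.

```python
from pathlib import Path


def remove_xml(directory="."):
    """Delete every regular file ending in .xml below directory; return how many were removed."""
    removed = 0
    # Materialise the list first so we are not deleting while the glob is still walking
    for p in list(Path(directory).rglob("*.xml")):
        if p.is_file():
            p.unlink()
            removed += 1
    return removed
```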

Now that the file names are in order, it’s time to change the content of the files. At first I thought I would do this with awk or sed, but I decided on Python instead, since it has a pretty neat HTML parser:

```python
#!/usr/bin/env python3
# clean_file.py: turn one Adium log file into plain text, in place
import sys
import traceback
from html.parser import HTMLParser


# Class used to parse the data in the Adium logs
# (technically they are XML, but an HTML parser will do)
class MyHTMLParser(HTMLParser):
    def __init__(self):
        super().__init__()
        self.output = ""

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "alias":
                # Append the sender
                self.output += value + ": "
            elif name == "time":
                # Append the time, replacing the T between date and time with a space
                self.output += "(" + value.replace("T", " ") + ") "

    def handle_data(self, data):
        # Append the message text
        self.output += data


def clean_string(file_name):
    try:
        # Instantiate an HTML parser; its output variable collects the filtered content
        parser = MyHTMLParser()

        # Parse the file line by line, retrieving the time, the sender and the message
        with open(file_name, "r", encoding="utf-8") as ifile:
            for line in ifile:
                parser.feed(line)
        # Flush any text the parser is still buffering
        parser.close()

        # Reopen the same file for writing (this clears its contents)
        # and write the filtered content to it
        with open(file_name, "w", encoding="utf-8") as ofile:
            ofile.write(parser.output)
    except Exception:
        print(traceback.format_exc())


# Take the name of the file (including its path) and pass it to clean_string
if __name__ == "__main__":
    clean_string(sys.argv[1])
```

I tested the program on a single file first, and it works.
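To make that test repeatable, here is a self-contained sketch that feeds one hand-written line to a parser built the same way as the script above. The sample line only assumes the general shape of an Adium message element: a message tag with sender, time and alias attributes wrapping the text. It is not copied from a real log, and the class name LogLineParser is hypothetical.

```python
from html.parser import HTMLParser


class LogLineParser(HTMLParser):
    """Collects '(time) alias: text' from Adium-style message elements."""

    def __init__(self):
        super().__init__()
        self.output = ""

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "alias":
                self.output += value + ": "
            elif name == "time":
                self.output += "(" + value.replace("T", " ") + ") "

    def handle_data(self, data):
        self.output += data


# Hypothetical sample line in the shape of an Adium <message> element
sample = ('<message sender="friend@example.com" time="2011-09-14T15:11:42-04:00" '
          'alias="Friend"><div><span>see you at 5</span></div></message>')
parser = LogLineParser()
parser.feed(sample)
parser.close()
print(parser.output)
# (2011-09-14 15:11:42-04:00) Friend: see you at 5
```

The time comes out before the alias because HTMLParser reports attributes in the order they appear in the tag.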

Then run the Python script on all the .txt files:

```console
$ find . | grep '\.txt$' | xargs -I{} python3 clean_file.py {}
```

Now move those text files to the Pidgin log directory. Usually a copy-paste with the merge option in a file manager GUI will do…
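As a scripted alternative to the GUI merge, shutil.copytree with dirs_exist_ok=True (Python 3.8 and later) copies a tree into an existing directory and merges the folders instead of failing. This is only a sketch. The Pidgin log location in the usage comment is an assumption, so check where your own Pidgin keeps its logs first. The name merge_into and the ACCOUNT placeholders are hypothetical. Files that already exist in the destination with the same name are overwritten.

```python
import shutil


def merge_into(src, dst):
    """Copy the tree at src into dst, merging with folders that already exist there.

    Files with the same name in dst are overwritten.
    """
    shutil.copytree(src, dst, dirs_exist_ok=True)


# Usage (assumed location and placeholder names; adjust to your setup):
# import os
# merge_into("ACCOUNT_FOLDER", os.path.expanduser("~/.purple/logs/jabber/ACCOUNT"))
```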